Credit Risk Signals You Can Trust.

What You Can Do with HEKA

How It Works

Existing Customer Record

Name, ID, date of birth, city — even if incomplete.

Live Data Extraction

Heka scans the open web for real-time behavioural, reputational, and relational signals.

AI-Powered Signal Structuring

Our AI turns raw, noisy data into clear signals that reveal risk factors and anomalies, with full traceability.

Actionable, Traceable Output

A structured, explainable result — with indicators showing whether the profile is:

- No Risk Factor Found

- Needs Underwriter Review

- Critical

We return only what you need — clear, evidence-based results, with links to source.
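To make the three-tier output concrete, here is a minimal sketch of how sourced indicators might roll up into one status. The `Indicator` and `Status` types, the severity ordering, and the rule that the profile takes its most severe sourced indicator are all illustrative assumptions. They are not Heka's actual schema or logic.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class Status(IntEnum):
    # Hypothetical ordering so that max() picks the most severe status.
    NO_RISK_FACTOR_FOUND = 0
    NEEDS_UNDERWRITER_REVIEW = 1
    CRITICAL = 2


@dataclass
class Indicator:
    """One extracted signal (hypothetical schema); it carries the URLs it came from."""
    name: str
    severity: Status
    sources: list[str] = field(default_factory=list)


def summarize(indicators: list[Indicator]) -> dict:
    """Roll indicators up into a single status while keeping the evidence trail."""
    # Only indicators that link back to a source count toward the result.
    traceable = [i for i in indicators if i.sources]
    status = max((i.severity for i in traceable), default=Status.NO_RISK_FACTOR_FOUND)
    return {
        "status": status.name,
        "evidence": [{"indicator": i.name, "sources": i.sources} for i in traceable],
    }


if __name__ == "__main__":
    result = summarize([
        Indicator("alias_mismatch", Status.NEEDS_UNDERWRITER_REVIEW,
                  ["https://example.com/evidence"]),
        Indicator("unsourced_hint", Status.CRITICAL),  # ignored: no source link
    ])
    print(result["status"])  # NEEDS_UNDERWRITER_REVIEW
```

Dropping unsourced indicators is one way to enforce "links to source". A different design might route them to review instead.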

Powered by Heka’s Identity Intelligence Engine

Explore More Resources

The Identity Pivot: Why 2026 Is the Year We Stop Fighting AI with AI

The digital trust ecosystem has reached a breaking point. For the last decade, the industry’s defense strategy was built on a simple premise: detecting anomalies in a sea of legitimate behavior. But as we enter 2026, the mechanics of fraud have fundamentally inverted.

With global scam losses crossing $1 trillion and deepfake attacks surging by 3,000%, the line between the authentic and the synthetic has been erased. We are now witnessing the birth of "autonomous fraud" – a landscape where barriers to entry have vanished, and the guardrails are gone.

At Heka, we believe we have reached a critical pivot point. The industry must move beyond the futile arms race of trying to outpace generative models by simply using AI to detect AI. The new objective for heads of fraud and risk leaders is not just detecting attacks; it is verifying life.

Here is how the landscape is shifting in 2026, and why "context" is the only defense left that scales.

The Industrialization of Deception

The most dangerous shift in 2026 is the democratization of high-end attack vectors. What was once the domain of sophisticated syndicates is now accessible to anyone with an internet connection.

This "Fraud as a Service" economy has lowered barriers to entry so drastically that 34% of consumers now report seeing offers to participate in fraud online – an alarmingly steep 89% year-over-year increase.

But the true threat lies in automation. We are witnessing the rise of the "Industrial Smishing Complex." According to insights from the Secret Service, we are seeing SIM farms capable of sending 30 million messages per minute – enough to text every American in under 12 minutes.
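As a quick sanity check on the 12-minute figure, the arithmetic below assumes one message per person. It uses roughly 340 million recipients, a round U.S. population figure supplied here that does not come from the Secret Service.

```latex
t = \frac{3.4 \times 10^{8}\ \text{messages}}{3 \times 10^{7}\ \text{messages/min}} \approx 11.3\ \text{min} < 12\ \text{min}
```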

This is not just spam; it is a volume game powered by AI agents that never sleep. In the "Pig Butchering 2.0" model, automated scam centers are replacing human labor with AI systems that handle the "hook and line" conversations entirely autonomously. When a single bad actor can launch millions of attacks from a one-bedroom apartment, volume becomes a weapon that overwhelms traditional defenses.

The Rise of the "Shapeshifter" and "Dust" Attacks

Traditional fraud prevention relies on identifying outliers – high-value transactions or unusual behaviors. In 2026, fraudsters have inverted this logic using two distinct strategies:

1. The Shapeshifting Agent

Static rules fail against dynamic adversaries. We are now facing "shapeshifting" AI agents that do not follow pre-defined malware scripts. Instead, these agents learn from friction in real-time. If a transaction is declined, the AI adjusts its tactics instantly, using the rejection data to "shapeshift" into a new attack vector. As noted by risk experts, these agents autonomously navigate trial-and-error loops, rendering static rules useless.

2. "Dust" Trails and Horizontal Attacks

While banks watch for the "big heist," fraud rings are executing "horizontal attacks." By skimming small amounts – often around $50 – from thousands of victims simultaneously, attackers create "dust trails" that stay below the investigation thresholds of major institutions.

Data from Sardine.AI indicates that fraud rings are now using fully autonomous systems to execute these attacks across hundreds of merchants simultaneously. Viewed in isolation, a single $50 charge looks like a normal transaction. It is only when viewed through the lens of web intelligence – seeing the shared infrastructure across the wider web – that the attack becomes visible.
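A horizontal attack only becomes visible once small charges are linked by shared infrastructure. That linking can be sketched as a simple aggregation. The parameters below are hypothetical and chosen for illustration:

- the `Charge` record,
- the `infra_id` field, which stands in for a shared device, IP block, or payment endpoint,
- the $100 "small charge" ceiling,
- the minimum merchant and victim counts.

```python
from collections import defaultdict
from typing import NamedTuple


class Charge(NamedTuple):
    merchant: str
    victim: str     # cardholder or account that was charged
    amount: float
    infra_id: str   # hypothetical shared-infrastructure key (device, IP block, endpoint)


def find_dust_trails(charges, max_amount=100.0, min_merchants=3, min_victims=3):
    """Return infrastructure keys that tie many small charges to many merchants and victims.

    Each charge looks normal on its own; the pattern only appears once charges
    are grouped by the infrastructure they share.
    """
    merchants = defaultdict(set)
    victims = defaultdict(set)
    for c in charges:
        if c.amount <= max_amount:  # keep only small "dust" charges
            merchants[c.infra_id].add(c.merchant)
            victims[c.infra_id].add(c.victim)
    return sorted(
        infra for infra in merchants
        if len(merchants[infra]) >= min_merchants and len(victims[infra]) >= min_victims
    )


if __name__ == "__main__":
    charges = [Charge(f"merchant-{i}", f"victim-{i}", 50.0, "ip-block-7") for i in range(5)]
    charges.append(Charge("merchant-1", "victim-99", 50.0, "home-router-1"))
    print(find_dust_trails(charges))  # ['ip-block-7']
```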

The "Back to Branch" Regression

Perhaps the most alarming trend in 2026 is the erosion of confidence in digital channels. Because AI-generated identities and deepfakes have reached such sophistication, 75% of financial institutions admit their verification technology now produces inconsistent results.

This failure has triggered a defensive regression: the return to physical branches. Gartner estimates that 30% of enterprises no longer trust biometrics alone, leading some banks to demand customers appear in person for identity proofing.

While this stops the immediate bleeding, it is a strategic failure. Forcing customers back to the branch introduces massive friction without solving the core problem. As industry experts note, if a teller reviews a driver's license "as if it's 1995" while facing a fraudster with perfect AI-generated documentation, we are merely adding inconvenience, not security.

The Solution: Context is the New Identity

The issue facing our industry is not a failure of digital identity itself; it is a failure of context.

Trust is fragile when it relies on a single signal, like a document scan or a selfie. In an AI-versus-AI world, seeing is no longer believing. However, while AI can fabricate a driver's license or a video feed, it consistently fails to recreate the messy, organic digital footprint of a real human being.

To survive the 2026 threat landscape, organizations must pivot toward:

1. Web Intelligence: Linking signals together to see the wider web of interactions rather than isolated events.

2. Long-Term, Consistent Presence: Analyzing the continuity of an identity over time. Real humans have history. Synthetic identities, no matter how polished, lack the depth of a long-term digital existence.

3. Cross-Channel Consistency: Looking for the shared infrastructure and overlapping identities that horizontal attacks inevitably leave behind.
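Linking signals and surfacing overlapping identities can be sketched with a union-find structure. It merges any accounts that share an attribute value. The attribute names are hypothetical, and treating any shared email, phone, or device as a link is a simplifying assumption. A production system would weight links and handle common values, such as a shared household connection, more carefully.

```python
class UnionFind:
    """Disjoint-set structure for merging accounts into clusters."""

    def __init__(self):
        self.parent = {}

    def find(self, x):
        self.parent.setdefault(x, x)
        # Path halving keeps the trees shallow.
        while self.parent[x] != x:
            self.parent[x] = self.parent[self.parent[x]]
            x = self.parent[x]
        return x

    def union(self, a, b):
        ra, rb = self.find(a), self.find(b)
        if ra != rb:
            self.parent[rb] = ra


def link_identities(accounts: dict[str, dict[str, str]]) -> list[set[str]]:
    """Group account IDs connected through any shared (attribute, value) pair."""
    uf = UnionFind()
    first_seen = {}  # (attribute, value) -> first account that used it
    for acct, attrs in accounts.items():
        uf.find(acct)  # register accounts that share nothing
        for key, value in attrs.items():
            token = (key, value)
            if token in first_seen:
                uf.union(first_seen[token], acct)
            else:
                first_seen[token] = acct
    clusters = {}
    for acct in accounts:
        clusters.setdefault(uf.find(acct), set()).add(acct)
    return list(clusters.values())


if __name__ == "__main__":
    accounts = {
        "a1": {"email": "x@mail.test", "device": "d1"},
        "a2": {"email": "y@mail.test", "device": "d1"},      # shares device with a1
        "a3": {"phone": "555-0100", "email": "y@mail.test"},  # shares email with a2
        "a4": {"email": "z@mail.test"},
    }
    print(sorted(sorted(c) for c in link_identities(accounts)))  # [['a1', 'a2', 'a3'], ['a4']]
```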

The 2026 Takeaway

The future offers a clear path forward. Fraud prevention is no longer about beating a single control – it is about bridging the gaps between them.

While identity and behavior are easier to fake in isolation, the real advantage lies in the complexity of real-world signals. These are the signals that remain expensive to manufacture at scale. Organizations that embrace this context-driven approach will do more than just stop the $1 trillion wave of autonomous fraud; they will unlock a seamless experience where trust is automatic.

Stay informed. Stay adaptive. Stay ahead.

At Heka Global, our platform delivers real-time, explainable intelligence from thousands of global data sources to help fraud teams spot non-human patterns, identity inconsistencies, and early lifecycle divergence long before losses occur.

Why Did So Many Identity Controls Fail in 2025?

2025 marked a turning point in digital identity risk. Fraud didn’t simply become more sophisticated – it became industrialized. What emerged across financial institutions was not a new fraud “type,” but a new production model: fraud operations shifted from human-led tactics to system-led pipelines capable of assembling identities, navigating onboarding flows, and adapting to defenses at machine speed.

Synthetic identities, account takeover attempts, and document fraud didn’t just rise in volume; they became more operationally consistent, more repeatable, and more automated. Fraud rings began functioning less like informal criminal networks and more like tech companies: deploying AI agents, modular tooling, continuous integration pipelines, and automated QA-style probing of institutional controls.

This is why so many identity controls failed in 2025. They were calibrated for adversaries who behave like people.

Automation Became the Default Operating Mode

The most consequential development of 2025 was the normalization of autonomous or semi-autonomous fraud workflows. AI agents began executing tasks traditionally requiring human coordination: assembling identity components, navigating onboarding flows, probing rule thresholds, and iterating on failures in real time. Anthropic’s September findings – documenting agentic AI gaining access to confirmed high-value targets – validated what fraud teams were already observing: the attacker is no longer just an individual actor but a persistent, adaptive system.

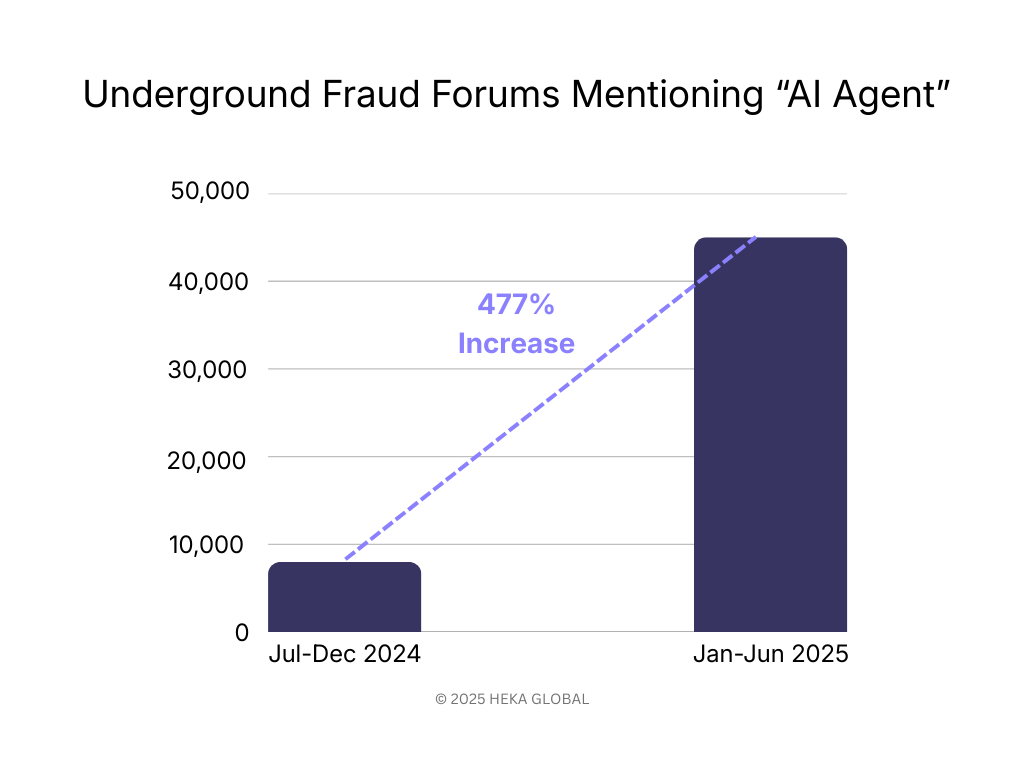

According to Visa, activity across its ecosystem shows clear evidence of an AI shift. Mentions of “AI Agent” in underground forums have surged 477%, reflecting how quickly fraudsters are adopting autonomous systems for social engineering, data harvesting, and payment workflows.

Operational consequences were immediate:

- Attempt volumes exceeded human-constrained detection models

- Timing patterns became too consistent for human-based anomaly rules

- Retries and adjustments became systematic rather than opportunistic

- Session structures behaved more like software than people

- Attacks ran continuously, unaffected by time zones, fatigue, or manual bottlenecks

Controls calibrated for human irregularity struggled against machine-level consistency. The threat model had shifted, but the control model had not.
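Timing that is "too consistent" can be measured with the coefficient of variation (standard deviation divided by mean) of the gaps between events in a session. Human activity tends to produce irregular gaps, while scripted activity often produces near-constant ones. This is a minimal sketch. The 0.1 cutoff and the five-event minimum are hypothetical values that would need tuning on real traffic.

```python
import statistics
from typing import Optional


def timing_regularity(timestamps: list[float]) -> Optional[float]:
    """Coefficient of variation (std / mean) of the gaps between events.

    Values near 0 mean near-constant spacing, which is typical of scripts.
    """
    if len(timestamps) < 5:
        return None  # too few events to judge
    ts = sorted(timestamps)
    gaps = [b - a for a, b in zip(ts, ts[1:])]
    mean_gap = statistics.mean(gaps)
    if mean_gap == 0:
        return 0.0  # all events at the same instant: treat as perfectly regular
    return statistics.pstdev(gaps) / mean_gap


def looks_automated(timestamps: list[float], cv_cutoff: float = 0.1) -> bool:
    """Hypothetical rule: flag sessions whose event spacing is nearly constant."""
    cv = timing_regularity(timestamps)
    return cv is not None and cv < cv_cutoff


if __name__ == "__main__":
    scripted = [0.0, 2.0, 4.0, 6.0, 8.0, 10.0]
    human = [0.0, 1.2, 5.8, 6.3, 14.0, 15.1]
    print(looks_automated(scripted), looks_automated(human))  # True False
```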

Synthetic Identity Production Reached Industrial Scale

2025 also saw the industrialization of synthetic identity creation – driven by both generative AI and the rapid expansion of fraud-as-a-service (FaaS) marketplaces. What previously required technical skill or bespoke manual work is now fully productized. Criminal marketplaces provide identity components, pre-validated templates, and automated tooling that mirror legitimate SaaS workflows.

These marketplaces supply:

- AI-generated facial images and liveness-passing videos

- Country-specific forged document packs

- Pre-scraped digital footprints from public and commercial sources

- Bulk synthetic identity templates with coherent PII

- Automated onboarding scripts designed to work across popular IDV vendors

- APIs capable of generating thousands of synthetic profiles at once

- And more…

This ecosystem eliminated traditional constraints on identity fabrication. In North America, synthetic document fraud rose 311% year-on-year. Globally, deepfake incidents surged 700%. And with access to consumer data platforms like BeenVerified, fraud actors needed little more than a name to construct a plausible identity footprint.

The critical challenge was not just volume, but coherence: synthetic identities were often too clean, too consistent, and too well-structured. Legacy controls interpret clean data as low risk. But today, the absence of noise is often the strongest indicator of machine-assembled identity.
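One way to operationalize "the absence of noise" is to count the distinct variants of each attribute across independent sources. Real people accumulate spelling variants, old addresses, and secondary emails, while an assembled identity often shows exactly one of each. The record format, the case and whitespace normalization, and the four-source minimum below are all hypothetical.

```python
def variant_counts(records: list[dict[str, str]]) -> dict[str, int]:
    """Distinct values per attribute across source records (case/whitespace ignored)."""
    seen: dict[str, set[str]] = {}
    for rec in records:
        for attr, value in rec.items():
            seen.setdefault(attr, set()).add(value.strip().lower())
    return {attr: len(values) for attr, values in seen.items()}


def is_suspiciously_clean(records: list[dict[str, str]], min_sources: int = 4) -> bool:
    """True when an identity appears in many sources yet never varies in any attribute."""
    if len(records) < min_sources:
        return False  # thin footprint: not enough evidence either way
    return all(n == 1 for n in variant_counts(records).values())


if __name__ == "__main__":
    assembled = [{"name": "Jane Roe", "address": "1 Main St"}] * 5
    organic = [
        {"name": "Jane Roe", "address": "1 Main St"},
        {"name": "J. Roe", "address": "1 Main St"},
        {"name": "jane roe", "address": "22 Oak Ave"},
        {"name": "Jane Roe", "address": "1 Main St"},
    ]
    print(is_suspiciously_clean(assembled), is_suspiciously_clean(organic))  # True False
```

Normalization is deliberately minimal, so formatting differences alone do not count as organic variation.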

Because FaaS marketplaces standardized production, institutions began seeing near-identical identity patterns across geographies, platforms, and product types – a hallmark of industrialized fraud. Controls validated what “existed,” not whether it reflected a real human identity. That gap widened every quarter in 2025.
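Near-identical identity patterns can be surfaced by reducing each profile to a "shape" that keeps only the format of its values. The sketch then counts how many regions share each shape. The shape rules (digits become `9`, letters become `a`), the fields, and the two-region minimum are illustrative assumptions. Coarse shapes also collide for many legitimate users, so in practice the counts would need to be weighed against a baseline.

```python
import re
from collections import defaultdict


def shape(value: str) -> str:
    """Replace characters by class, so 'jsmith84@x.io' and 'kjones19@y.io' collide."""
    value = re.sub(r"[0-9]", "9", value)
    return re.sub(r"[A-Za-z]", "a", value)


def shared_templates(profiles, fields=("email", "phone", "address"), min_regions=2):
    """Map each profile shape that recurs in at least `min_regions` regions to those regions."""
    regions_by_shape = defaultdict(set)
    for p in profiles:
        key = tuple(shape(p[f]) for f in fields)
        regions_by_shape[key].add(p["region"])
    return {k: sorted(v) for k, v in regions_by_shape.items() if len(v) >= min_regions}


if __name__ == "__main__":
    profiles = [
        {"region": "US", "email": "jsmith84@x.io", "phone": "555-0142", "address": "12 Elm St"},
        {"region": "UK", "email": "kjones19@y.io", "phone": "555-0199", "address": "48 Oak Rd"},
        {"region": "US", "email": "maria.gonzalez@mail.test", "phone": "+1 555 010 7788",
         "address": "Apt 3, 901 Pine Avenue"},
    ]
    print(shared_templates(profiles))
```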

Where Identity Controls Reached Their Limits

As fraud operations industrialized, several foundational identity controls reached structural limits. These were not tactical failures; they reflected the fact that the underlying assumptions behind these controls no longer matched the behavior of modern adversaries.

Device intelligence weakened as attackers shifted to hardware

For years, device fingerprinting was a strong differentiator between legitimate users and automated or high-risk actors. That advantage eroded once attackers began operating real hardware. Europol’s Operation SIMCARTEL in October 2025 exposed the shift. It was one of many recent cases in which criminals used genuine hardware and SIM box technology, specifically 40,000 physical SIM cards, to generate real, high-entropy device signals that bypassed checks. Fraud rings moved from spoofing devices to operating them at scale, eroding the effectiveness of fingerprinting models designed to catch software-based manipulation.

Knowledge-based authentication effectively collapsed

With exposed PII at unprecedented volumes and AI retrieval tools able to surface answers instantly, knowledge-based authentication (KBA) no longer indicated whether the person answering actually owned the identity. Breaches like the TransUnion incident in late August 2025, which exposed 4.4 million sensitive records, flood the dark web with PII. These events give bad actors the exact answers needed to bypass security questions. Paired with AI retrieval tools, they leave KBA controls defenseless. What was once a fallback degraded into a near-zero-value signal.

Rules were systematically reverse-engineered

High-volume, automated adversarial probing enabled fraud actors to map rule thresholds with precision. UK Finance and Cifas jointly reported 26,000 ATO attempts engineered to stay just under the £500 review limit. Rules didn’t fail because they were poorly designed. They failed because automation made them predictable.
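Threshold mapping of this kind leaves a statistical mark: attempts pile up just below the review limit. This minimal sketch assumes a £500 limit and compares how crowded the band just beneath it is now against a baseline. The 10% band width and the 3x ratio are illustrative choices, not values from UK Finance or Cifas.

```python
def band_share(amounts, limit=500.0, band=0.10):
    """Fraction of amounts falling in [limit * (1 - band), limit)."""
    if not amounts:
        return 0.0
    lower = limit * (1 - band)
    in_band = sum(1 for a in amounts if lower <= a < limit)
    return in_band / len(amounts)


def probing_suspected(recent, baseline, limit=500.0, band=0.10, ratio=3.0):
    """True when the just-under-limit band is `ratio` times more crowded than usual."""
    base = band_share(baseline, limit, band)
    now = band_share(recent, limit, band)
    if base == 0:
        return now > 0  # any mass in a normally empty band is worth a look
    return now / base >= ratio


if __name__ == "__main__":
    baseline = [20, 75, 120, 480, 60, 300, 15, 90, 250, 40]  # 1 of 10 in the band
    recent = [495, 499, 470, 20, 498, 460, 35, 490]           # 6 of 8 in the band
    print(probing_suspected(recent, baseline))  # True
```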

Lifecycle gaps remained unprotected

Most controls still anchor identity validation to isolated events – onboarding, large transactions, or high-friction workflows. Fraud operations exploited the unmonitored spaces in between:

- contact detail changes

- dormant account reactivation

- incremental credential resets

- low-value testing

Legacy controls were built for linear journeys. Fraud in 2025 moved laterally.

What 2026 Fraud Strategy Now Requires

The institutions that performed best in 2025 were not the ones with the most tools – they were the ones that recalibrated how identity is evaluated and how fraud is expected to behave. The shift was operational, not philosophical: identity is no longer an event to verify, but a system to monitor continuously.

Four strategic adjustments separated resilient teams from those that saw the highest loss spikes.

1. Treat identity as a longitudinal signal, not a point-in-time check

Onboarding signals are now the weakest indicators of identity integrity. Fraud prevention improved when teams shifted focus to:

- behavioral drift over time

- sequence patterns across user journeys

- changes in device, channel, or footprint lineage

- reactivation profiles on dormant accounts

Continuous identity monitoring is replacing traditional KYC cadence. The strongest institutions treated identity as something that must prove itself repeatedly, not once.
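Behavioral drift can be measured by comparing a recent window of activity against the account's own history. This sketch uses the total variation distance between two hour-of-day login distributions. The feature (login hour), the distance measure, and the 0.5 alert level are assumptions made for illustration.

```python
from collections import Counter


def hour_distribution(login_hours: list[int]) -> dict[int, float]:
    """Empirical distribution of login hours (0-23)."""
    counts = Counter(login_hours)
    total = sum(counts.values())
    return {h: c / total for h, c in counts.items()}


def total_variation(p: dict[int, float], q: dict[int, float]) -> float:
    """Half the L1 distance between two discrete distributions (0 = identical, 1 = disjoint)."""
    keys = set(p) | set(q)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in keys)


def has_drifted(history_hours: list[int], recent_hours: list[int], threshold: float = 0.5) -> bool:
    """Hypothetical rule: alert when recent logins diverge strongly from the account's history."""
    return total_variation(hour_distribution(history_hours),
                           hour_distribution(recent_hours)) >= threshold


if __name__ == "__main__":
    history = [8, 9, 9, 8, 19, 20, 8, 9]
    print(has_drifted(history, [2, 3, 3, 2]))  # True: night-time logins never seen before
    print(has_drifted(history, [8, 9, 20]))    # False: consistent with history
```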

2. Incorporate external and open-web intelligence into identity decisions

Industrialized fraud exploits the gaps left by internal-only models. High-performing institutions widened their aperture and integrated signals from:

- digital footprint depth and entropy

- cross-platform identity reuse

- domain/phone/email lineage

- web presence maturity

- global device networks and associations

These signals exposed synthetics that passed internal checks flawlessly but could not replicate authentic, long-term human activity on the open web.

Identity integrity is now a multi-environment assessment, not an internal verification process.
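Footprint depth and entropy can be approximated from two inputs: the categories of sources where an identity appears, and when it was first seen. The category labels, Shannon entropy over those categories, and age in years are illustrative assumptions about how such features might be computed. None of them describe a specific vendor's model.

```python
import math
from collections import Counter
from datetime import date


def footprint_features(sightings: list[tuple[str, date]], today: date) -> dict:
    """sightings: (source_category, first_seen_date) pairs for one identity (hypothetical format)."""
    if not sightings:
        return {"sources": 0, "entropy_bits": 0.0, "age_years": 0.0}
    counts = Counter(cat for cat, _ in sightings)
    n = len(sightings)
    # Shannon entropy in bits: higher means presence is spread across more kinds of sources.
    entropy = sum((c / n) * math.log2(n / c) for c in counts.values())
    oldest = min(d for _, d in sightings)
    return {
        "sources": n,
        "entropy_bits": round(entropy, 3),
        "age_years": round((today - oldest).days / 365.25, 1),
    }


if __name__ == "__main__":
    today = date(2025, 1, 15)
    organic = [("social", date(2012, 5, 1)), ("professional", date(2015, 3, 2)),
               ("news", date(2019, 7, 9)), ("forum", date(2010, 1, 15))]
    fresh = [("social", date(2024, 11, 1)), ("social", date(2024, 11, 2)),
             ("social", date(2024, 11, 3))]
    print(footprint_features(organic, today))  # entropy 2.0 bits, age 15.0 years
    print(footprint_features(fresh, today))    # entropy 0.0 bits, age 0.2 years
```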

3. Detect automation explicitly

Most fraud in 2025 exhibited machine-level regularity – predictable timing, optimized retries, stable sequences. Teams that succeeded treated automation as a primary signal, incorporating:

- micro-timing analysis

- session-structure profiling

- velocity and retry pattern detection

- non-human cadence modeling

Fraud no longer “looks suspicious”; it behaves systematically. Detection must reflect that.
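Velocity and retry-pattern detection are often implemented with a sliding time window per key, such as a card, account, or device. This is a minimal sketch. The class name, the 60-second window, and the limit of five failures are hypothetical defaults.

```python
from collections import defaultdict, deque


class RetryVelocity:
    """Counts failed attempts per key inside a sliding time window (hypothetical helper)."""

    def __init__(self, window_seconds: float = 60.0, max_failures: int = 5):
        self.window = window_seconds
        self.max_failures = max_failures
        self.failures = defaultdict(deque)  # key -> timestamps of recent failures

    def record_failure(self, key: str, ts: float) -> bool:
        """Record a declined attempt; return True once the key exceeds the limit."""
        q = self.failures[key]
        q.append(ts)
        # Evict failures that fell out of the window (assumes timestamps arrive in order).
        while q and ts - q[0] > self.window:
            q.popleft()
        return len(q) > self.max_failures


if __name__ == "__main__":
    rv = RetryVelocity()
    # Six declines two seconds apart: only the sixth crosses the limit.
    print([rv.record_failure("card-1", t) for t in range(0, 12, 2)])
```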

4. Shift from tool stacks to orchestration

Fragmented fraud stacks produced fragmented intelligence. Institutions saw the strongest improvements when they unified:

- IDV

- behavioral analytics

- device and network intelligence

- OSINT and digital footprint context

- transaction and account-change data

into a single, coherent decision layer. Data orchestration provided two outcomes legacy stacks could not:

- Contextual scoring – identities evaluated across signals, not in isolation

- Consistent policy application – reducing false positives and operational drag

The shift isn’t toward more controls; it is toward coordination.
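A single decision layer can be sketched as a function that combines per-component scores with explicit weights and applies one policy table. The component names follow the list above. The weights, the 0-to-1 risk convention, the neutral default for missing layers, and the thresholds are assumptions. Real deployments would calibrate them on labeled outcomes.

```python
# Hypothetical weights per signal family; they sum to 1.0, so the result stays in [0, 1].
WEIGHTS = {
    "idv": 0.20,
    "behavioral": 0.25,
    "device_network": 0.20,
    "osint_footprint": 0.20,
    "transaction_account_change": 0.15,
}

# One policy table for every decision, checked from the highest threshold down.
POLICY = [(0.7, "decline"), (0.4, "review"), (0.0, "approve")]


def decide(scores: dict[str, float]) -> tuple[float, str]:
    """Fuse per-layer risk scores (0 = low risk, 1 = high risk) into one action.

    A missing layer counts as a neutral 0.5 rather than 0, so an absent
    signal is not silently read as evidence of safety.
    """
    combined = sum(w * scores.get(name, 0.5) for name, w in WEIGHTS.items())
    for threshold, action in POLICY:
        if combined >= threshold:
            return round(combined, 3), action
    return round(combined, 3), "approve"  # only reached if scores fall below 0


if __name__ == "__main__":
    # A clean document check does not outweigh risky behavior, device, and footprint signals.
    print(decide({"idv": 0.1, "behavioral": 0.9, "device_network": 0.8,
                  "osint_footprint": 0.9, "transaction_account_change": 0.6}))
```

Contextual scoring here comes from evaluating every layer together. Consistent policy comes from routing every decision through the same `POLICY` table.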

Closing Perspective

Identity controls didn’t fail in 2025 because institutions lacked capability. They failed because the models underpinning those controls were anchored to a world where identity was stable, fraud was manual, and behavioral irregularity differentiated good actors from bad.

In 2025, identity became dynamic and distributed. Fraud became industrialized and system-led.

Institutions that recalibrate their approach now – treating identity as a living system, integrating external context, and unifying decisioning layers – will be best positioned to defend against the operational realities of 2026.

The New Faces of Fraud: How AI Is Redefining Identity, Behavior, and Digital Risk

1. Introduction – Identity Is No Longer a Fixed Attribute

The biggest shift in fraud today isn’t the sophistication of attackers – it’s the way identity itself has changed.

AI has blurred the boundaries between real and fake. Identities can now be assembled, morphed, or automated using the same technologies that power legitimate digital experiences. Fraudsters don’t need to steal an identity anymore; they can manufacture one. They don’t guess passwords manually; they automate the behavioral patterns of real users. They operate across borders, devices, and platforms with no meaningful friction.

The scale of the problem continues to accelerate. According to the Deloitte Center for Financial Services, synthetic identity fraud is expected to reach US $23 billion in losses by 2030. Meanwhile, account takeover (ATO) activity has risen by nearly 32% since 2021, with an estimated 77 million people affected, according to Security.org. These trends reflect not only rising attack volume, but the widening gap between how identity operates today and how legacy systems attempt to secure it.

This isn’t just “more fraud.” It’s a fundamental reconfiguration of what identity means in digital finance – and how easily it can be manipulated. Synthetic profiles that behave like real customers, account takeovers that mimic human activity, and dormant accounts exploited at scale are no longer anomalies. They are a logical outcome of this new system.

The challenge for banks, neobanks, and fintechs is no longer verifying who someone is, but understanding how digital entities behave over time and across the open web.

2. The Blind Spots in Modern Fraud Prevention

Most fraud stacks were built for a world where:

- identity was stable

- behavior was predictable

- fraud required human effort

Today’s adversaries exploit the gaps in that outdated model.

Blind Spot 1 — Static Identity Verification

Traditional KYC treats identity as fixed. Synthetic profiles exploit this entirely by presenting clean credit files, plausible documents, and AI-generated faces that pass onboarding without friction.

Blind Spot 2 — Device and Channel Intelligence

Legacy device fingerprinting and IP checks no longer differentiate bots from humans. AI agents now mimic device signatures, geolocation drift, and even natural session friction.

Blind Spot 3 — Transaction-Centric Rules

Fraud rarely begins with a transaction anymore. Synthetics age accounts for months, ATO attackers update contact information silently, and dormant accounts remain inactive until the moment they’re exploited.

In short: fraud has become dynamic; most defenses remain static.

3. The Changing Nature of Digital Identity

For decades, digital identity was treated as a stable set of attributes: a name, a date of birth, an address, and a document. The financial system – and most fraud controls – were built around this premise. But digital identity in 2025 behaves very differently from the identities these systems were designed to protect.

Identity today is expressed through patterns of activity, not static attributes. Consumers interact across dozens of platforms, maintain multiple email addresses, replace devices frequently, and leave fragmented traces across the open web. None of this is inherently suspicious – it’s simply the consequence of modern digital life.

The challenge is that fraudsters now operate inside these same patterns.

A synthetic identity can resemble a thin-file customer.

An ATO attacker can look like a user switching devices.

A dormant account can appear indistinguishable from legitimate inactivity.

In other words, the difficulty is not that fraudsters hide outside normal behavior – it is that the behavior considered “normal” has expanded so dramatically that older models no longer capture its boundaries.

This disconnect between how modern identity behaves and how traditional systems verify it is precisely what makes certain attack vectors so effective today. Synthetic identities, account takeovers, and dormant-account exploitation thrive not because they are new techniques, but because they operate within the fluid, multi-channel reality of contemporary digital identity – where behavior shifts quickly, signals are fragmented, and legacy controls cannot keep pace.

4. Synthetic IDs: Fraud With No Victim and No Footprint

Synthetic identities combine real data fragments with fabricated details to create a customer no institution can validate – because no real person is missing. This gives attackers long periods of undetected activity to build credibility.

Fraudsters use synthetics to:

- open accounts and credit lines,

- build transaction history,

- establish low-risk behavioral patterns,

- execute high-value bust-outs that are difficult to recover.

Why synthetics succeed

- Thin-file customers look similar to fabricated identities.

- AI-generated faces and documents bypass superficial verification.

- Onboarding flows optimized for user experience leave less room for deep checks.

- Synthetic identities “warm up” gradually, behaving consistently for months.

Equifax estimates synthetics now account for 50–70% of credit fraud losses among U.S. banks.
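The warm-up-then-bust-out pattern suggests a simple check. It compares the current month's spend against the account's own seasoned baseline and its credit limit. The 5x spike ratio, the 80% utilization level, and the three-month minimum history are hypothetical parameters. They only illustrate the shape of such a rule.

```python
from statistics import mean


def bust_out_risk(monthly_spend: list[float], credit_limit: float,
                  spike_ratio: float = 5.0, util_level: float = 0.8,
                  min_history: int = 3) -> bool:
    """Flag a sudden jump to near-maximum spend after a period of steady behavior.

    monthly_spend: spend per month, oldest first; the last entry is the current month.
    """
    if len(monthly_spend) <= min_history or credit_limit <= 0:
        return False  # not enough seasoning history to compare against
    *history, current = monthly_spend
    baseline = mean(history)
    # Multiplying avoids dividing by zero; a zero baseline (long inactivity) counts as spiked.
    spiked = current >= spike_ratio * baseline
    maxed = current / credit_limit >= util_level
    return spiked and maxed


if __name__ == "__main__":
    print(bust_out_risk([300, 280, 320, 310, 305, 4800], credit_limit=5000))  # True
    print(bust_out_risk([300, 280, 320, 310, 305, 600], credit_limit=5000))   # False
```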

What institutions must modernize

One-time verification cannot identify a profile that was never tied to a real human. Institutions need ongoing, external intelligence that answers a different question:

Does this identity behave like an actual person across the real web?

5. Account Takeover: When Verified Identity Becomes the Attack Surface

Account takeover (ATO) is particularly difficult because it begins with a legitimate user and legitimate credentials. Financial losses tied to ATO continue to grow. VPNRanks reports a sustained increase in both direct financial impact and the volume of compromised accounts, further reflecting how identity-based attacks have become central to modern fraud.

Fraudsters increasingly use AI to automate:

- credential-stuffing attempts,

- session replay and friction simulation,

- device and browser mimicry,

- navigation patterns that resemble human users.

Once inside, attackers move quickly to secure control:

- updating email addresses and phone numbers,

- adding new devices,

- temporarily disabling MFA,

- initiating transfers or withdrawals.

Signals that matter today

Early indicators are subtle and often scattered:

- Email change + new device within a short window

- Logins from IP ranges linked to synthetic identity clusters

- High-velocity credential attempts preceding a legitimate login

- Sudden extensions of the user’s online footprint

- Contact detail changes followed by credential resets

The issue is not verifying credentials; it is determining whether the behavior matches the real user.
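Two of the signals above are sequence rules: an email change followed by a new device, and a contact change followed by a credential reset. Each event is ordinary on its own, but the pair within a short window is not. This is a minimal sketch. The event names and the 24-hour window are hypothetical.

```python
from datetime import datetime, timedelta

# Hypothetical (first_event, second_event) pairs that matter when they occur close together.
RISKY_SEQUENCES = [
    ("email_change", "new_device"),
    ("contact_change", "credential_reset"),
]


def risky_pairs(events, window=timedelta(hours=24)):
    """events: (timestamp, event_type) tuples for one account.

    Returns each risky pair whose second event followed the first within `window`.
    """
    events = sorted(events)
    hits = []
    for i, (t1, e1) in enumerate(events):
        for t2, e2 in events[i + 1:]:
            if t2 - t1 > window:
                break  # later events are even further away
            if (e1, e2) in RISKY_SEQUENCES and (e1, e2) not in hits:
                hits.append((e1, e2))
    return hits


if __name__ == "__main__":
    t0 = datetime(2025, 3, 1, 9, 0)
    events = [
        (t0, "login"),
        (t0 + timedelta(minutes=5), "email_change"),
        (t0 + timedelta(hours=2), "new_device"),        # within 24h of the email change
        (t0 + timedelta(days=3), "contact_change"),
        (t0 + timedelta(days=5), "credential_reset"),   # too late to pair
    ]
    print(risky_pairs(events))  # [('email_change', 'new_device')]
```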

6. Dormant Accounts: The Silent Fraud Vector

Dormant or inactive accounts, once considered low-risk, have become reliable targets for fraud. Their inactivity provides long periods of concealment, and they often receive less scrutiny than active accounts. This makes them attractive staging grounds for synthetic identities, mule activity, and small-value laundering that can later escalate.

Fraudsters use dormant accounts because they represent the perfect blend of low visibility and high permission: the infrastructure of a legitimate customer without the scrutiny of an active one.

Why dormant ≠ low-risk

Dormant accounts are vulnerable because of their inactivity – not in spite of it.

- They bypass many ongoing monitoring rules. Most systems deprioritize accounts with no transactional activity.

- Attackers can prepare without triggering alerts. Inactivity hides credential testing, information gathering, and initial contact-detail changes.

- Reactivation flows are often weaker than onboarding flows. Institutions assume returning customers are inherently trustworthy.

- Contact updates rarely raise suspicion. A fraudster changing an email or phone number on a dormant account is often treated as routine.

- Fraud can accumulate undetected for long periods. Months or years of dormancy create a wide window for planning, staging, and lateral movement.

Better defenses

Institutions benefit from:

- refreshing identity lineage at the moment of reactivation,

- updating digital-footprint context rather than relying on historical data,

- linking dormant accounts to known synthetic or mule clusters.

Dormant ≠ safe. Dormant = unobserved.
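These defenses can be combined into a reactivation gate. When an account returns after a long quiet period, the gate refreshes its identity context before trusting it. It escalates if the account is linked to a flagged cluster or if its first move is a contact or credential change. The 180-day dormancy cutoff, the event names, the cluster IDs, and the action labels are all hypothetical.

```python
from datetime import date

# Hypothetical event types that are risky as the first action after dormancy.
SENSITIVE_FIRST_MOVES = {"email_change", "phone_change", "credential_reset"}


def reactivation_action(last_activity: date, event_date: date, event_type: str,
                        account_clusters: set[str], flagged_clusters: set[str],
                        dormancy_days: int = 180) -> str:
    """Choose a handling path for the first event on an account after a quiet period."""
    if (event_date - last_activity).days < dormancy_days:
        return "normal_monitoring"        # not dormant: usual rules apply
    if account_clusters & flagged_clusters:
        return "block_and_investigate"    # linked to a known synthetic or mule cluster
    if event_type in SENSITIVE_FIRST_MOVES:
        return "step_up_verification"     # contact or credential change as the first move
    return "refresh_identity_context"     # re-check lineage and footprint before trusting


if __name__ == "__main__":
    print(reactivation_action(date(2024, 1, 1), date(2025, 3, 1), "email_change",
                              {"cluster-9"}, {"cluster-1"}))  # step_up_verification
```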

7. How Modern Fraud Actually Operates (AI + Lifecycle)

Fraud today is not opportunistic. It is operational, coordinated, and increasingly automated.

How AI amplifies fraud operations

AI enables fraudsters to automate tasks that were once slow or manual:

- Identity creation: synthetic faces, forged documents, fabricated businesses

- Scalable onboarding: bots submitting high volumes of applications

- Behavioral mimicry: friction simulation, geolocation drift, session replay

- Customer-support evasion: LLM agents bypassing KBA or manipulating staff

- OSINT mining: automated scraping of breached data and persona fragments

This automation feeds into a consistent operational lifecycle.

The modern fraud lifecycle

1. Identity Fabrication: AI assembles identity components designed to pass onboarding.

2. Frictionless Onboarding: Attackers target institutions with low-friction digital processes.

3. Seasoning or Dormancy: Accounts age quietly, building legitimacy or remaining inactive.

4. Account Manipulation: Email, phone, and device updates prepare the account for monetization.

5. Monetization & Disappearance: Funds move quickly – often across jurisdictions – before detection.

Most institutions detect fraud in Stage 5. Modern prevention requires detecting divergence in Stages 1–4.
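Detecting divergence before Stage 5 implies tracking which stage an account appears to have reached. This minimal sketch assumes a hypothetical mapping from event types to the five stages. It reports the furthest stage observed, so an account that shows Stage 4 manipulation can be escalated before any money moves.

```python
# Hypothetical mapping from observed event types to lifecycle stages 1-5.
STAGE_OF_EVENT = {
    "synthetic_indicator": 1,   # Identity Fabrication
    "low_friction_signup": 2,   # Frictionless Onboarding
    "long_inactivity": 3,       # Seasoning or Dormancy
    "email_change": 4,          # Account Manipulation
    "phone_change": 4,
    "device_added": 4,
    "outbound_transfer": 5,     # Monetization & Disappearance
}


def furthest_stage(event_types: list[str]) -> int:
    """Highest lifecycle stage suggested by the account's events (0 if none map)."""
    return max((STAGE_OF_EVENT.get(e, 0) for e in event_types), default=0)


def triage(event_types: list[str]) -> str:
    """Label an account by how far along the lifecycle it appears to be."""
    stage = furthest_stage(event_types)
    if stage >= 5:
        return "loss_in_progress"   # the stage most institutions detect today
    if stage == 4:
        return "pre_loss_alert"     # divergence caught before monetization
    return "monitor"


if __name__ == "__main__":
    print(triage(["low_friction_signup", "long_inactivity", "email_change", "device_added"]))
    # pre_loss_alert
```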

8. Rethinking Defense: From Static Checks to Continuous Intelligence

Fraud has evolved from discrete events to continuous identity manipulation. Defenses must do the same. This shift is fundamental:

Institutions must understand identity the way attackers exploit it – as something dynamic, contextual, and shaped by behavior over time.

9. Conclusion

Fraud is becoming faster and more coordinated, and it is scaling at levels never seen before. The institutions that win the next phase of this battle will be those that stop relying on static checks and begin treating identity as something contextual and continuously evolving.

That requires intelligence that looks beyond internal systems and into the open web, where digital footprints, behavioral signals, and online history reveal whether an identity behaves like a real person, or a synthetic construct designed to exploit the gaps.

In an AI-versus-AI world, timing is everything. The earlier your system understands an identity, the sooner you can stop the threat.

Heka Raises $14M to Bring Real-Time Identity Intelligence to Financial Institutions

FOR IMMEDIATE RELEASE

Windare Ventures, Barclays and other institutional investors back Heka’s AI engine as financial institutions seek stronger defenses against synthetic fraud and identity manipulation.

New York, 15 July 2025

Consumer fraud is at an all-time high. Last year, losses hit $12.5 billion – a 38% jump year-over-year. The rise is fueled by burner behavior, synthetic profiles, and AI-generated content. But the tools meant to stop it – from credit bureau data to velocity models – miss what’s happening online. Heka was built to close that gap.

Inspired by the tradecraft of the intelligence community, Heka analyzes how a person actually behaves and appears across the open web. Its proprietary AI engine assembles digital profiles that surface alias use, reputational exposure, and behavioral anomalies. This helps financial institutions detect synthetic activity, connect with real customers, and act faster with confidence.

At the core of Heka’s web intelligence engine is an analyst-grade AI agent. Unlike legacy tools that rely on static files, scores, or blacklists, Heka’s AI processes large volumes of web data to produce structured outputs like fraud indicators, updated contact details, and contextual risk signals. In one recent deployment with a global payment processor, Heka’s AI engine caught 65% of account takeover losses without disrupting healthy user activity.

Heka is already generating millions in revenue through partnerships with banks, payment processors, and pension funds. Clients use Heka’s intelligence to support critical decisions from fraud mitigation to account management and recovery. The $14 million Series A round, led by Windare Ventures with participation by Barclays, Cornèr Banca, and other institutional investors, will accelerate Heka’s U.S. expansion and deepen its footprint across the UK and Europe.

“Heka’s offering stood out for its ability to address a critical need in financial services – helping institutions make faster, smarter decisions using trustworthy external data. We’re proud to support their continued growth as they scale in the U.S.,” said Kester Keating, Head of US Principal Investments at Barclays.

Ori Ashkenazi, Managing Partner at Windare Ventures, added: “Identity isn’t a fixed file anymore. It’s a stream of behavior. Heka does what most AI can’t: it actually works in the wild, delivering signals banks can use seamlessly in workflows.”

Heka was founded by Rafael Berber, former Global Head of Equity Trading at Merrill Lynch; Ishay Horowitz, a senior officer in the Israeli intelligence community; and Idan Bar-Dov, a fintech and high-tech lawyer. The broader team includes intel analysts, data scientists, and domain experts in fraud, credit, and compliance.

“The credit bureaus were built for another era. Today, both consumers and risk live online. Heka’s mission is to be the default source of truth for this new digital reality – always-on, accurate, and explainable,” said Idan Bar-Dov, co-founder and CEO of Heka.

About Heka

Heka delivers web intelligence to financial services. Its AI engine is used by banks, payment processors, and pension funds to fill critical blind spots in fraud mitigation, credit decisioning, and account recovery. The company was founded in 2021 and is headquartered in New York and Tel Aviv.

Press contact

Joy Phua Katsovich, VP Marketing | joy@hekaglobal.com